Can You Tell?

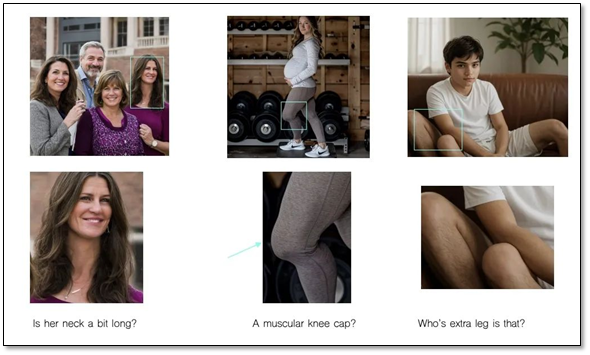

- Anatomical Unreasonableness – Unnatural hands, strange teeth, or implausible bone structure.

- Stylization – Is the image too clean or too cinematic?

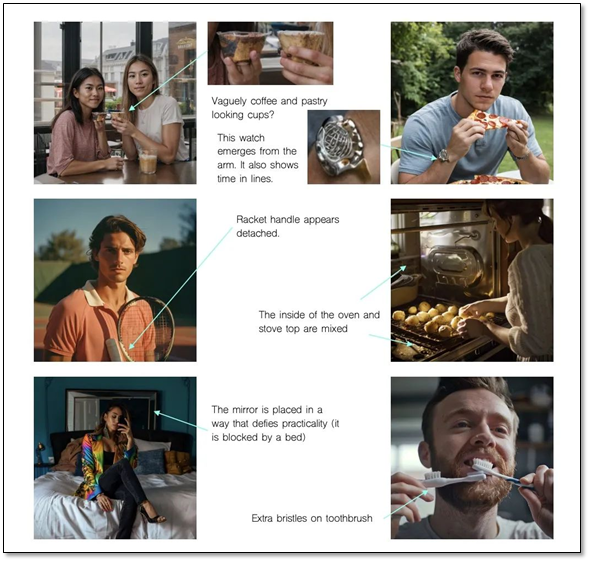

- Functional Irrationality – Because AI models have a limited understanding of objects and how they are used, objects that are placed incorrectly are a key tell.

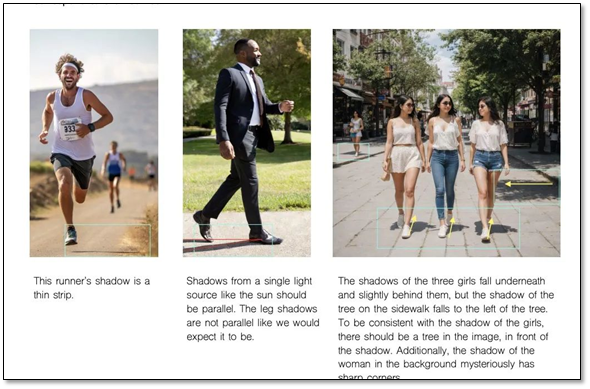

- Physics Violations – Incorrect or missing shadows and reflections are a tell.

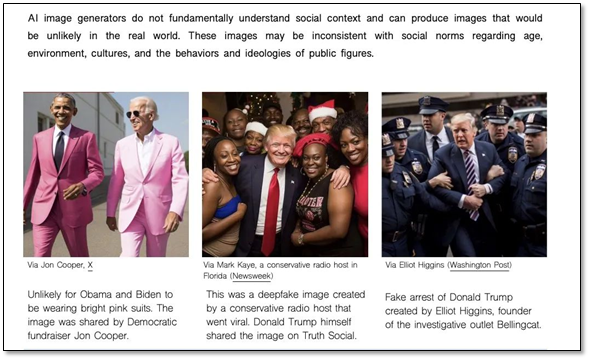

- Cultural or Common-Sense Violations – These are harder to spot because they are highly subjective.

https://detectfakes.kellogg.northwestern.edu/