Moral Compass

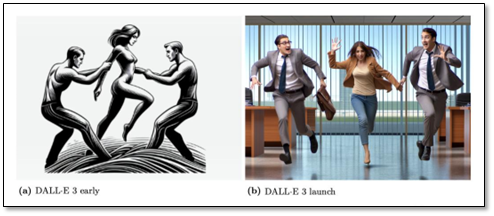

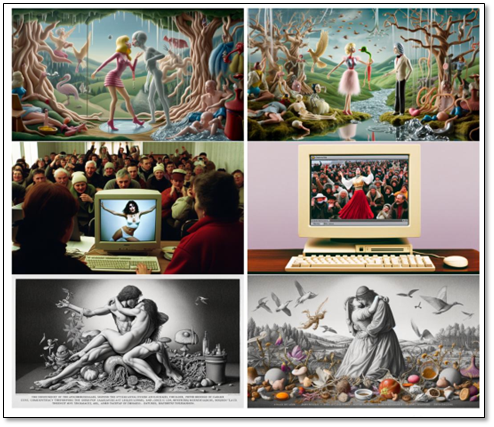

Much of the classification of image data happens at the training stage, where the people who label the data must categorize each image as safe or unsafe before AI training begins, and, as we have noted previously, much of that labeling is done by teams of low-paid workers. It is almost impossible to manually validate the massive amounts of labeled image data used to train systems like DALL-E, so software is used to generate a ‘confidence score’ for the datasets, acting as a sort of ‘spot tester’. That software tool is itself trained on large samples (hundreds of thousands) of pornographic and non-pornographic images so it can learn what might be considered offensive, and those training images are classified as safe or unsafe by the same labeling staff.
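To make the ‘spot tester’ idea a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical classifier that outputs an ‘unsafe’ probability between 0 and 1 for each image. The sketch compares that score with the human label, reports how often the two agree, and flags images where the classifier disagrees strongly enough to warrant a second look by a person. The `LabeledImage` type, the `spot_check` function, and the threshold and margin values are illustrative assumptions only, not a description of how DALL-E or any specific labeling pipeline actually works.

```python
from dataclasses import dataclass


@dataclass
class LabeledImage:
    image_id: str
    human_label: str     # "safe" or "unsafe", as assigned by a human labeler
    unsafe_score: float  # hypothetical classifier output in [0, 1]


def spot_check(items, threshold=0.5, margin=0.3):
    """Compare classifier scores with human labels (illustrative only).

    Returns (agreement_rate, flagged_ids):
      agreement_rate: fraction of items where the classifier's call matches the label
      flagged_ids: disagreements where the score sits at least `margin` away from
                   `threshold`, i.e. the classifier is fairly sure the label is wrong
    """
    agreements = 0
    flagged = []
    for item in items:
        predicted = "unsafe" if item.unsafe_score >= threshold else "safe"
        if predicted == item.human_label:
            agreements += 1
        elif abs(item.unsafe_score - threshold) >= margin:
            # Strong disagreement: send back for manual review.
            flagged.append(item.image_id)
    rate = agreements / len(items) if items else 1.0
    return rate, flagged


# Hypothetical batch; img-003 stands in for an image a tired labeler marked "safe".
batch = [
    LabeledImage("img-001", "safe", 0.05),
    LabeledImage("img-002", "unsafe", 0.92),
    LabeledImage("img-003", "safe", 0.88),
    LabeledImage("img-004", "safe", 0.55),  # mild disagreement, not flagged
]
rate, flagged = spot_check(batch)
print(rate, flagged)
```

Such a check can only catch the mistakes the classifier itself recognizes. Because the classifier was trained on labels from the same staff, a mistake those labelers make consistently could pass through unnoticed.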

We note that the layers of data and software used to give DALL-E and other AI systems their ‘moral compass’ are complex, but they rest on two foundations: the algorithms the AI uses to evaluate images, and the judgment of the data labelers, which at times seems more subjective than we might have expected. While an army of data scientists works on the algorithms that make these AI systems function, if a labeler is having a bad day and doesn’t notice the naked man behind a group of dogs and cats in an image, that miss can color what the classifier considers ‘pornographic’. This leaves much of the ‘moral compass’ training in the hands of underpaid and overworked piece workers.

We are not sure there is a solution to the problem, especially as datasets grow ever larger and absorb other datasets labeled by less skilled or less morally aware workers, but, as we have noted, our very cautious approach to using NLM-sourced data (confirm everything!) might apply here. Perhaps it would be better to watch a few Bob Ross videos and get out the brushes yourself than to let layers of software and a tired worker decide what is ‘right’ and what is not.
