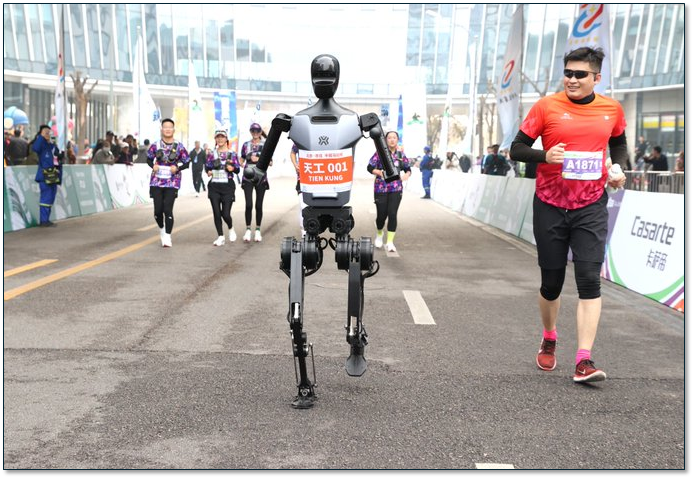

Run For Your Life

In the upcoming race, humanoid robots will run the entire course for the first time. They must be bipedal, able to walk or run upright, and resemble humans, and they are not allowed to have wheels. They can be anywhere from 20 inches to 6.5 feet tall, but the distance from the hip joint to the sole of the foot cannot exceed 15.7”. They can be remotely controlled or fully autonomous and may stop to have their batteries replaced if needed. Beyond that, there are no restrictions on the mechanics, and entries are expected from robotics companies all over the world. Unlike their human competitors, the robots will not have to get up at 4AM to train, drink gallons of protein-powder shakes, or buy expensive running shoes.

E-Town believes this will be the first time humanoid robots and humans compete together in a full half-marathon, and it will award prizes to the top three finishers, although we are unsure what the prize would be for a robot winner. Either way, the competition is another visible step for China’s robotics industry, much of which is located in Beijing. The E-Town district has more than 140 companies in its robotics ecosystem, with output valued at ~US$1.4b, and it is focusing on building AI into high-end humanoid robots and further developing the local robotics environment. While there are many robotic development projects, humanoid robots seem to get particular attention, despite fears that they will someday collectively decide to replace humans entirely. By contrast, the little cat robot developed by Yukai Engineering (pvt) in Japan, which blows air to cool your food, doesn’t look particularly formidable, although with 15m to 20m cats in Japan, a robotic feline takeover could signal the beginning of the end for humanity.
