Robot Race...Slower Pace…China’s Chase…Tech Embrace

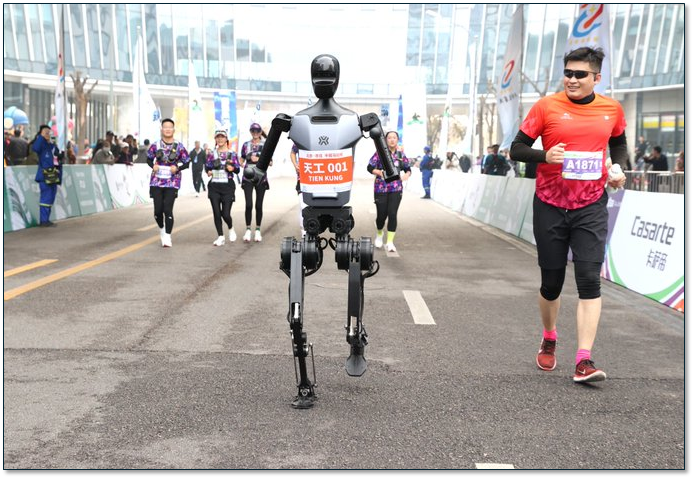

The race was run late last week, with over twenty ‘humanoid’ robots taking part in an event that served as a showcase for Chinese robotics efforts, another area where the Chinese government has set aggressive goals to compete with the US. Over the last few months there has been a constant barrage of publicity and flowery propaganda about how China will become the frontrunner in robotics, raising concern that the US lead in the space might be diminished and, for some, adding to the fear that humans might one day be replaced by robots. The robots ran in a separate lane and were allowed to be accompanied by ‘handlers’ who ‘ran’ the robots with wireless or wired controllers. The robots were allowed time to replace batteries when necessary, and teams were also allowed to swap a faulty robot for another, although doing so incurred a 10-minute penalty.

The robot winner was Tiangong Ultra, which completed the race in 2 hours and 40 minutes with only three battery changes (and a human with a hand behind Tiangong in case it fell backward), while the human winner finished in one hour and two minutes. Even so, a number of robotics professors and Chinese company officials said they were quite impressed that many of the robots were able to finish the race, although there were some obvious mishaps, as seen in the video below.

This was an interesting show of China’s robotics expertise, with robots designed for running rather than the acrobatics usually seen in puff pieces on the competition between the US and China. It was a more practical approach to robotics and, based on some of the results, not nearly as easy as might be thought. When one thinks about the mechanics of a human marathon runner, it becomes easier to understand how complex translating that schema into a mechanical device can be. Aside from the obvious individual muscle contractions and the timing of those contractions, their coordination is essential to movement, and small adjustments to muscle movements are essential to maintaining balance and stability on even slightly uneven surfaces.

There is also the need for a constant stream of sensing information, including what is called ‘proprioception’, the human body’s ability to sense its own position, movement, and force in space without relying completely on visual information. While this ‘sixth sense’ is typically referred to in connection with more highly refined skills, such as playing an instrument, it is also what allows humans to touch their nose with their eyes closed or pick up something without looking at it. As this is considered a subconscious sense in humans, it will be a hard one to instill in robots, and without it, robots will struggle to adapt to the varying conditions that we face each day. Perhaps when we figure out how it works in us, we will be able to pass the concept on to our new marathon partners.

Figure 1 - Tiangong Ultra FTW - Source: VCG / Visual China Group / Getty Images
