AGI

There is only one problem, and it is something called a world view. A world view is a human being’s image of how the world works. It’s not a single image but a collection of information that you started amassing the second you were born. From those first moments, the human mind builds a world model by using sensory information to draw conclusions. A baby eventually understands that if you hold something and then let go, it falls to the ground. The baby doesn’t know what the concept is called, but after a few (or many) things fall to the floor, the baby understands, “If I let something go or push it off a table, it will fall to the floor.” Simply put, our world model is experience-based.

Humans are also subjective. Baby A will learn, “If I let go of something, it will drop to the floor, and people will come over and make funny noises that are frightening,” while Baby B learns, “If I let go of this, it will drop to the floor, and people will come over and make funny noises that are funny.” Much of what humans learn is implicit and does not always require conscious effort. You don’t have to think hard to realize that your feet are going to blister if you walk barefoot on a hot surface. Once it happens, you don’t forget, but we also update our world model every second we are alive, as our sensory input continues until death.

We are lucky to have the capacity to create a world model that helps us interact with our environment; without it, humans as a species would never have survived. The same is true for animals, which navigate their environment by building a world model of their own. Theirs may be very different from ours, but it is also based on sensory input and a subjective interpretation of that input, learned implicitly, and updated continually. While animal world models differ from animal to animal because of their different sensory capabilities, animals acquire and process information the same way we do.

AI systems don’t work the same way. While many in the industry believe AIs build their own internal world models, those models are certainly unlike our own. AI world models are quantitative, not qualitative. They are not based on sensory data but on numbers that have been labeled (mostly by humans), which makes the information explicit, and they are limited by the data they are trained on. Of course, the typical response is that if you give them more data to learn from, they will get smarter, but we do not believe that is true, because AI systems do not have the ability to be subjective. If two AIs are based on exactly the same algorithms and trained on exactly the same data, they will arrive at the same answers, while two humans will not. AIs will certainly find patterns and relationships that we cannot, but unless they are told that a set of numbers represents an object falling to the ground, those numbers are meaningless information.

Credit where credit is due: AI systems are very good at finding relationships, essentially similarities that are extremely subtle. For example, they can recognize that Dr. Seuss used certain words, rhyming patterns, letters, parts of speech, and other conventions that we don’t consciously notice. That is how an AI can write ‘in the style of’ Dr. Seuss, while we need some sort of sensory input to know that hearing “Sam I am” makes us think of Dr. Seuss. But it doesn’t stop there. The AI spits out an 8-line poem about a small environmentalist and moves on to the next task, whereas when we hear or see the word ‘Lorax’, we think of the happy times when that story was read to us as children, or when we read it to our own children. That points to the difference in world models. Here is what the AI wrote:

In a world of bright hues, lived young Tilly True, who cared for the planet, the whole day through! With a Zatzit so zappy, and boots made of blue, she’d tell grumpy Grumbles, "There's much we can do!"

"Don't litter the Snumbles, or spoil the sweet air, Let's plant a big Truffula, with utmost of care!" Said Tilly so tiny, her voice like a chime, "For a healthy green planet, is truly sublime!"

We are not criticizing AIs here. They are machines, essentially super calculators with an almost infinite ability to follow instructions and find patterns, but giving them more data doesn’t allow them to build a subjective world model. While an AI can note that the colors of two pixels in an image differ by 1 bit in a 16-bit number, our sensory (visual) input fits that color into our world view, and we say, “Wow, those are beautiful flowers.”

AGI, in our view, would require a huge amount of sensory input and the ability to place that input into a world view that is subjective, and at the moment, we don’t believe that is possible for any AI. AIs can be better ‘pattern recognizers’ than humans and don’t get annoyed or tired, but they cannot ‘see’ or ‘hear’ or ‘touch’ anything and that is what keeps AGI from becoming a reality. JOHO.

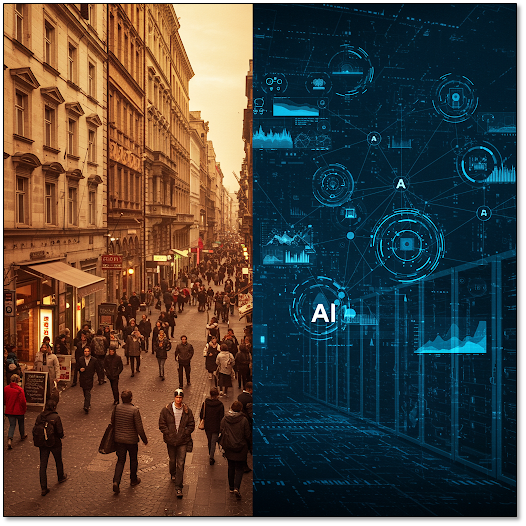

Side Note: Here is the image that we got when we asked Gemini, “How about you come up with an image that represents a human world view on one side and an AI world view on the other?” That has to tell you something, right?
