Not So Smart

In order for AI to fulfill all the hopes and dreams of its supporters, it not only has to be fast (it is), but it also has to be able to work 24/7 (it can), learn from its mistakes (sometimes), and be correct 99.9% of the time (it’s not). But the business end of AI does not have the patience to wait until AI meets those specifications, and it has ushered us into a world where AI is a tool for getting a leg up on the competition. Consumer electronics (CE) companies are among the most aggressive in promoting AI, and the hype continues to escalate, but the reception, at least among the general public, is a bit less enthusiastic, despite initially high expectations. In a 2024 business survey, 23% of businesses said AI had underperformed their expectations, 59% said it had met them, and 18% said it had exceeded them,[2] while only 37% said they believed their business was fully prepared to implement its AI strategy (86% said it would take three years to get there). That is a little less enthusiastic than the hype might indicate.

From a business standpoint, the potential issues that rank highest are data privacy, cybersecurity problems, and regulatory issues. Consumers seem to be a bit more wary: only 27% say they would trust AI to execute financial transactions, and 25% say they would trust AI’s accuracy when it comes to medical diagnoses or treatment recommendations. To be fair, 55% of consumers do trust AI to perform simple tasks, such as collating product information before making a purchase, and 50% would trust its product recommendations, but that drops to 44% for AI assistance with written communications.[3] Why is there a lack of trust in AI at the consumer level? There is certainly a generational issue to take into consideration, and an existential (‘end of the world’) fear among a small group, but there seems to be a big difference between the attitudes of business leaders and consumers toward AI, and a recent YouGov survey[4] points to why.

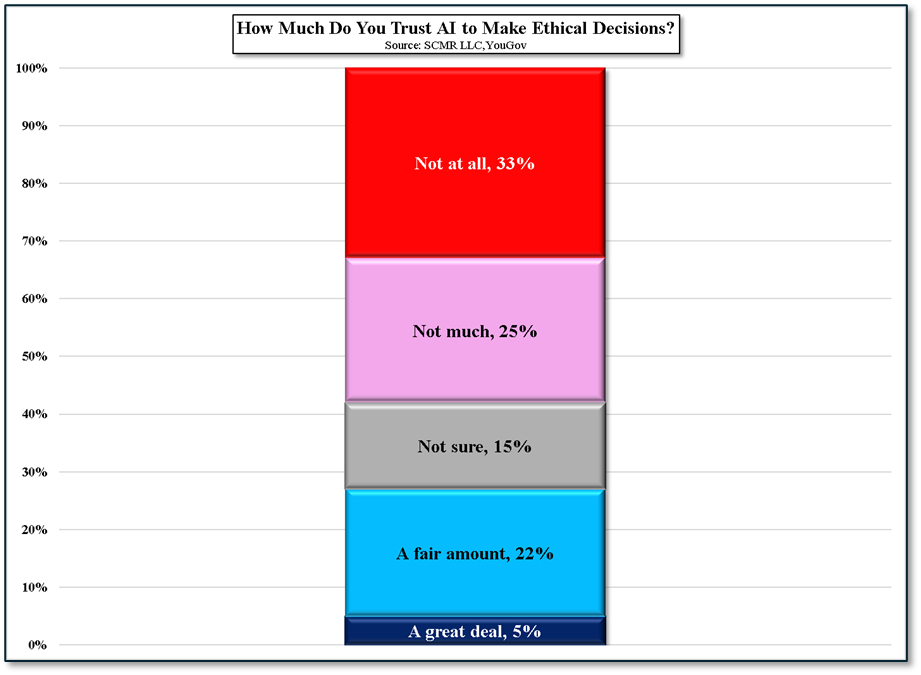

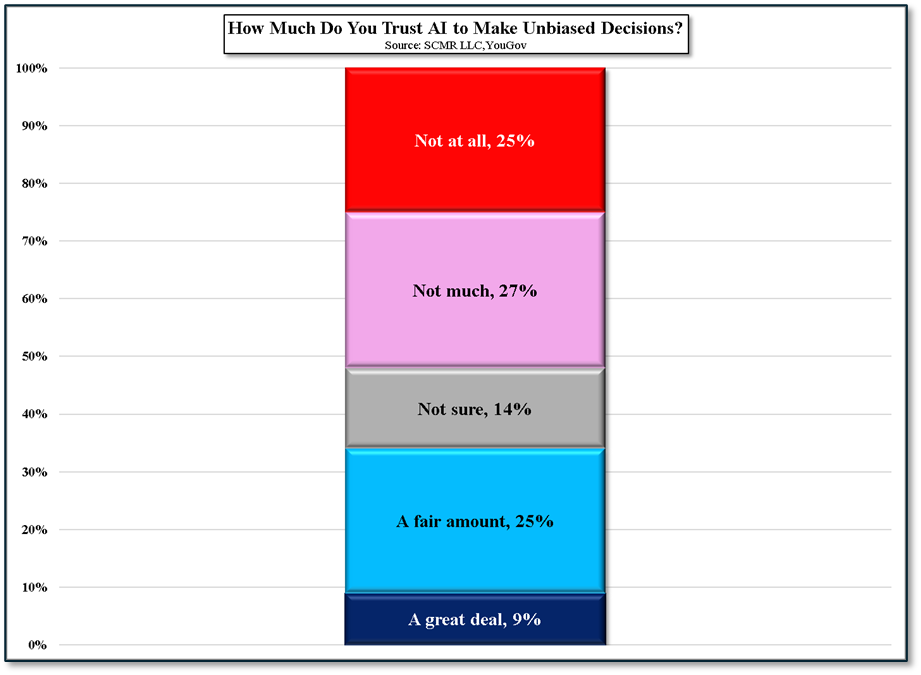

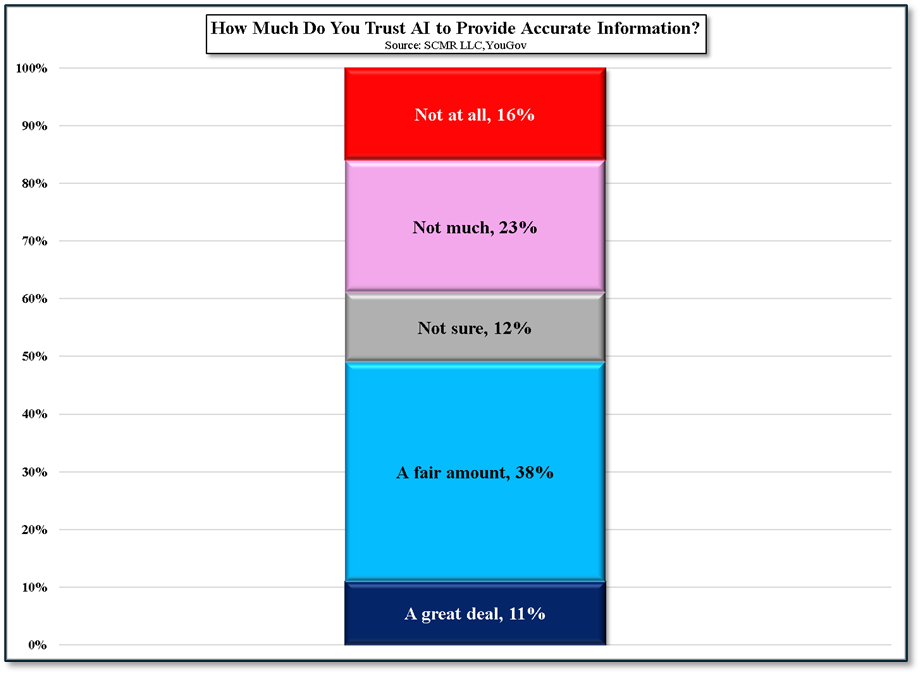

US citizens were asked a number of questions about their feelings toward AI in three specific situations: making ethical decisions, making unbiased decisions, and providing accurate information. Here are the results:

[1] Fair use, https://en.wikipedia.org/w/index.php?curid=76753763

[2] https://www.riverbed.com/riverbed-wp-content/uploads/2024/11/global-ai-digital-experience-survey.pdf

[3] https://www.statista.com/statistics/1475638/consumer-trust-in-ai-activities-globally/

[4] https://today.yougov.com/technology/articles/51368-do-americans-think-ai-will-have-positive-or-negative-impact-society-artificial-intelligence-poll

LLMs and AI chatbots have become so important from a marketing standpoint that few in the CE space can resist using them, even if the underlying technology is not fully developed. Even Apple (AAPL), which tends to be among the last major CE brands to adopt new technology, was forced into providing ‘Apple Intelligence’, a branded product that was obviously not fully developed or tested. Apple already used AI for facial and object recognition, to help Siri understand user questions, and to suggest words as you type, but there was no official name for Apple’s AI features until iOS 18.1, when ‘Apple Intelligence’ became the broad title for them. The two main AI functions that appeared in iOS 18.1 were notification summaries and the use of AI to better understand context in Apple’s ‘focus mode’. iOS 18.2 added AI to improve recognition in photo selection and gave Siri a better understanding of questions to improve its suggestions. It also allowed users to use natural language when creating ‘shortcuts’ (essentially a sequence of actions that automates a task), and enhanced the system’s ability to suggest actions as a shortcut is being built.

None of these functions are unusual, particularly the notification summaries, which are similar to the Google (GOOG) search summaries found in Chrome, but there was a hitch. It turns out that Apple Intelligence was producing inaccurate summaries of news stories. The problem became most obvious when a summary suggested that the suspect in the killing of UnitedHealthcare’s CEO had shot himself, prompting a complaint from the BBC. Apple has now released a beta of iOS 18.3 that disables the news and entertainment summaries and allows users to turn off summaries on an application-by-application basis. It also displays all AI summaries in italics so that users can tell whether a notification comes directly from a news source or is an AI-generated Apple Intelligence summary.

While this is an embarrassment for Apple, it illustrates two points. First, AI systems are ‘best match’ systems. They compare a query against patterns learned from their training data and try to choose the letter or word (more precisely, the token) that is the most likely continuation. This is a bit of an oversimplification, as during training the AI builds up far more nuanced detail than a simple letter- or word-matching system would (think “What would be the best match in this instance, based on the letters, words, and sentences that came before this letter or word, including those in the previous sentence or sentences?”). But even with massive training datasets, AIs don’t ‘understand’ more esoteric concepts, such as implications or the consequences of a conclusion, so they make mistakes, especially when dealing with narrow topics.
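To make the ‘best match’ idea concrete, here is a deliberately tiny sketch in Python. It assumes a simple bigram model that only counts which word follows which in a made-up training text; `TRAINING_TEXT`, `train_bigrams`, `best_match`, and `generate` are all hypothetical names. Real LLMs use neural networks over tokens and far more context, so this illustrates the idea rather than how any actual product works.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

# Hypothetical toy training text (not real training data).
TRAINING_TEXT = "the sky is blue . the sky is clear . the sea is blue . the grass is green ."


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count how often each word follows each other word in the text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def best_match(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    candidates = follows.get(word.lower())  # .get() does not create empty entries
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Repeatedly append the best match for the previous word only (no wider context)."""
    out = [start.lower()]
    for _ in range(length):
        nxt = best_match(follows, out[-1])
        if nxt is None:
            break  # no statistics for this word, so stop
        out.append(nxt)
    return out


model = train_bigrams(TRAINING_TEXT)
print(" ".join(generate(model, "grass", 2)))  # prints "grass is blue"
```

Even though the training text says “the grass is green”, the sketch prints “grass is blue”, because it only looks at the previous word and “blue” followed “is” most often. The result is fluent and confidently wrong, a crude version of the missing-context mistakes described here.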

Mistakes, sometimes known as hallucinations, can be answers that are factually incorrect or unusual reactions to questions. In some cases the AI will invent information to fill a data gap, or even create a fictitious source to justify an answer, even an incorrect one. In other cases the AI system will slant information toward a particular end, or sound confident that the information is correct until it is questioned. More subtle (and more dangerous) hallucinations appear in answers that sound correct on the surface but are false, making them hard to detect without specialized knowledge of the topic. There are many reasons why AI systems hallucinate, but one is that they struggle to understand the real world, physical laws, and the implications surrounding factual information. Without this knowledge of how things work in the real world, AIs will sometimes mold a response to their own level of understanding, producing an answer that might be close to correct but is missing a key point. (Think of a story about a forest written without any knowledge of gravity: “Some trees in the forest are able to float their leaves through the air to other trees…” Could it be true? Possibly, unless there is gravity.)

Second, it erodes confidence in AI and can shift consumer sentiment from ‘world changing’ to ‘maybe correct’, and that is hard to recover from. Consumers are forgiving folks. They get hot under the collar when they find out they are being ignored, lied to, or overcharged, and brands know enough to lie low for a while and then jump back on whatever bandwagon is current, but ‘fool me twice’ wariness can take a while to dissipate. AI will get better, especially non-user-facing AI, but if consumers begin to feel that they might not be able to trust AI’s answers, the industry will have to rely on the enthusiasm of the corporate world to support it. Given the cost of training and running large models, we expect it will need all the paying users it can find. Don’t overpromise.
