Nth Dimension

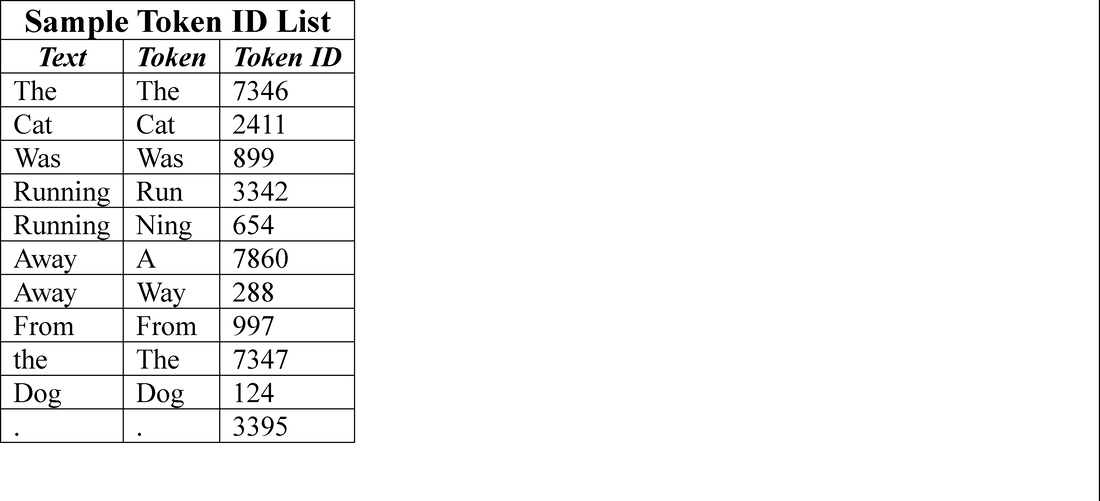

When we started our conversation with ChatGPT, we were trying to understand how a model represents information for each token as it learns. We understand that a tokenizer (the software that prepares text for the model) breaks text down into tokens, often one token per word, although in many cases a token is a sub-word piece, such as a syllable or even a single character. Each token is assigned an ID number, which is recorded in a master token ID list.

Example:

“The cat was running away from the dog.”

The list of unique tokens for a large model is fixed, at roughly 100,000 entries. No matter how much data the model sees, it only uses tokens from this list, breaking unknown words into smaller known sub-word pieces. The corpus of data the model sees can be far larger, on the order of 300 billion tokens, but every one of them is drawn from that same list. The token ID list remains with the model after training, while the long stream of tokens processed during training does not need to be stored: the model learns from those tokens but does not need them later.
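To make the fixed-list idea concrete, here is a minimal sketch of our own. It assumes a tiny made-up vocabulary and a simple greedy longest-match split; real tokenizers use more sophisticated learned schemes, so treat the names `PIECES`, `VOCAB`, `tokenize` and `encode` as hypothetical illustrations rather than how any particular model does it.

```python
import string

# Hypothetical toy vocabulary: a few whole words and sub-word pieces,
# plus single letters as a last-resort fallback. A real list has ~100,000 entries.
PIECES = ["the", "cat", "was", "run", "ning", "away", "from", "dog", "."]
VOCAB = {piece: idx for idx, piece in enumerate(PIECES + list(string.ascii_lowercase))}


def tokenize(text):
    """Split text into pieces that all exist in VOCAB (greedy longest match)."""
    tokens = []
    # Lowercase, and separate the full stop so it becomes its own token.
    for word in text.lower().replace(".", " .").split():
        start = 0
        while start < len(word):
            # Try the longest remaining substring first, then shrink it.
            for end in range(len(word), start, -1):
                if word[start:end] in VOCAB:
                    tokens.append(word[start:end])
                    start = end
                    break
            else:
                # Nothing in the toy vocabulary covers this character.
                raise ValueError(f"no vocabulary piece covers {word[start]!r}")
    return tokens


def encode(text):
    """Map each token to its ID number from the fixed list."""
    return [VOCAB[token] for token in tokenize(text)]


print(tokenize("The cat was running away from the dog."))
print(encode("The cat was running away from the dog."))
```

Note that "running" is not in the toy list, so it comes out as the two known pieces "run" and "ning", and both occurrences of "the" map to the same ID.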

The part that is difficult to visualize comes when the tokens are first encountered by the model. The model looks up the token in the token list and matches it to another list that contains that token's vector. Think of a vector as a string of numbers (768 numbers for each token in a small model).
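The lookup described above can be sketched as a simple table indexed by token ID. This is only an illustration under our own assumptions: the table is tiny, the starting values are random placeholders (training is what would adjust them), and the names `embedding_table` and `embed` are hypothetical.

```python
import random

VOCAB_SIZE = 10   # toy value for illustration; a large model has ~100,000 entries
EMBED_DIM = 768   # numbers per token in a small model

# One row of EMBED_DIM numbers per token ID. The values here are random
# placeholders (an assumption); a trained model would have learned values.
rng = random.Random(0)
embedding_table = [
    [rng.gauss(0.0, 0.02) for _ in range(EMBED_DIM)]
    for _ in range(VOCAB_SIZE)
]


def embed(token_ids):
    """Return the vector stored for each token ID, in sequence order."""
    return [embedding_table[token_id] for token_id in token_ids]


vectors = embed([0, 1, 2, 0])
print(len(vectors), "tokens,", len(vectors[0]), "numbers each")
```

The same token ID always returns the same row, so the first and last vectors in this example are identical.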

While this is a very simplistic look at how an LLM learns, one should understand that the model is always looking at the relationships between tokens, particularly in a sequence, and with over 700 vector dimensions, or ‘characteristics’, for each token, the model can develop lots of connections between tokens. It is tempting to think of the dimensions as having specific ‘names’, but the semantic information they contain is quite subtle. It is all based on the relationships that the tokens have to each other, which are ‘shared’ across the token vectors.
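One common way to put a number on how related two token vectors are is cosine similarity, which compares the direction the vectors point in. The sketch below is ours, not something ChatGPT gave us, and the four-number vectors in `toy_vectors` are invented by hand purely for illustration; real vectors have hundreds of dimensions and learned values.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy vectors (hypothetical values, not taken from any model).
toy_vectors = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "from": [0.0, 0.1, 0.9, 0.8],
}

cat_dog = cosine_similarity(toy_vectors["cat"], toy_vectors["dog"])
cat_from = cosine_similarity(toy_vectors["cat"], toy_vectors["from"])
print(f"cat vs dog:  {cat_dog:.3f}")
print(f"cat vs from: {cat_from:.3f}")
```

With these made-up numbers, "cat" and "dog" score much closer to 1 than "cat" and "from" do. Notice that no single position in the vector has a name; the relationship only shows up when the vectors are compared as a whole.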

All in, this is just the tip of the iceberg in terms of understanding how models work and their positives and negatives, and even the best of LLMs still has difficulty explaining how things work internally when the questions are highly specific. Sometimes we think it’s because it doesn’t really know how it works, and other times it seems that it just doesn’t want to give out that proprietary detail. But we will continue to dig and pass on what we find out and how it affects AIs and their use in current society. More to come…
