The Physical World

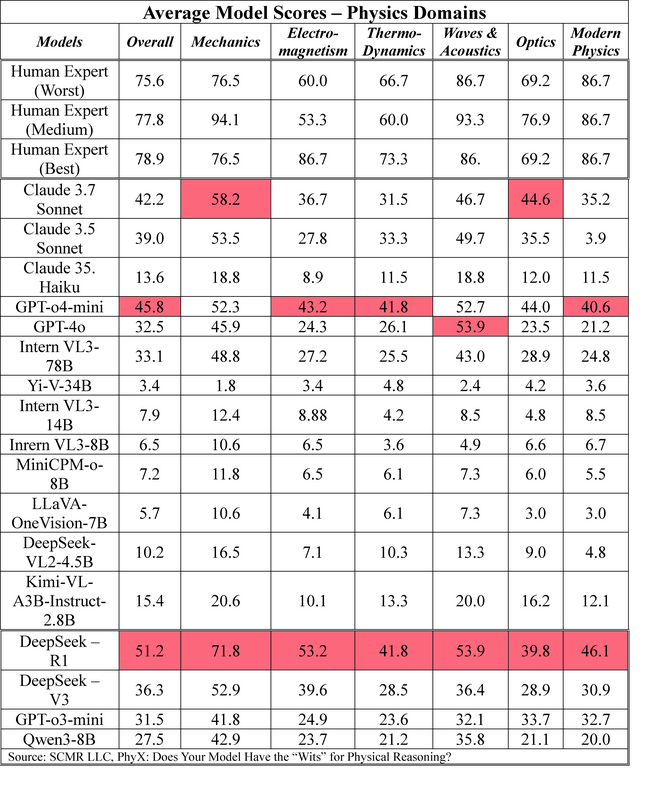

The use of the word ‘reason’ is really a stretch. The dictionary definition, “think, understand, and form judgments by a process of logic,” is accurate on the ‘logic’ part but far off on ‘think’ and ‘understand’. There is no understanding, just the ability to use the relationships learned during training to come up with the most logical answer. This becomes very apparent in physics, where much of the work requires physical reasoning, and big models seem to have considerable difficulty solving physics problems correctly. A group of researchers at the University of Michigan, the University of Toronto, and the University of Hong Kong decided to create a set of 6,000 physics questions to see whether models were up to the task. They did this even though the same models were able to solve Olympiad mathematics problems with human-level accuracy on standard benchmarking platforms.

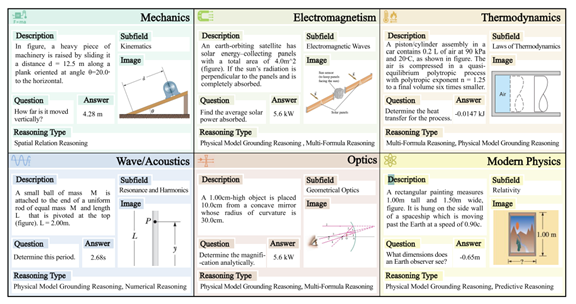

The researchers covered six physics domains: Mechanics, Electromagnetism, Thermodynamics, Wave/Acoustics, Optics, and Modern Physics. Before we go further, we should admit that we were quickly humbled upon seeing even the simplest of the 3,000 questions. That said, physical problem-solving fundamentally differs from pure mathematical reasoning or science-knowledge question answering. It requires models to decode implicit conditions in the questions, such as interpreting "smooth surface" as meaning the coefficient of friction equals zero. It also requires them to maintain physical consistency, since the laws of physics don’t change with different reasoning pathways. Physics also demands a kind of visual perception that mathematics does not, and that presents a challenge for large models. The new benchmark the researchers developed consists of 50% open-ended questions and also includes 3,000 unique images that the model must decipher.

We cannot say exactly why the models did not fare well, but we can shed some light on the types of errors that were found:

- Visual Reasoning Errors (39.6%) – An inability of the model to correctly extract visual information.

- Text Reasoning Errors (13.5%) – Incorrect processing or interpretation of textual content.

- Lack of Knowledge (38.5%) – Incomplete understanding of specific domain knowledge.

- Calculation Errors (8.3%) – Mistakes in arithmetic operations or unit conversions.

All in all, typical benchmarks overlook physical reasoning. Physical reasoning requires integrating domain knowledge with real-world constraints, which is a difficult task for models that don’t live in the real world. Relying on memorized information, superficial visual patterns, and mathematical formulas does not generate real understanding. The researchers also note that schematics and textbook-style illustrations might be suitable for evaluating conceptual reasoning, but they might not capture the complexity of perception in natural environments. You have to live it to understand it.
