Will AI Cause the End of Social Media?

To become more accurate, models need more examples. This could mean more examples of text from famous authors, more annotated images of gazebos, dogs, ships, or flagpoles, or more examples of even more specific data, such as court cases or company financial information. Current Large Language Models (LLMs) are trained on text and code datasets that contain trillions of words, but the constantly expanding sum total of human text is loosely estimated in the quadrillions, so even a massive training dataset would represent less than a tenth of a percent of the corpus of human text. It would seem, then, that running out of training data is a concern for the distant future, but that is not the case.
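The "less than a tenth of a percent" figure is a simple ratio, and it holds whenever the corpus of human text is more than 1,000 times larger than the training set. The sketch below works through that arithmetic; the word counts are hypothetical round numbers chosen for illustration, not published figures for any particular model.

```python
def corpus_share(training_words: float, corpus_words: float) -> float:
    """Fraction of the estimated corpus of human text covered by one training set."""
    return training_words / corpus_words


# Hypothetical figures for illustration only: 2 trillion words of training data
# against an assumed corpus of 5 quadrillion words.
share = corpus_share(2e12, 5e15)
print(f"{share:.2%} of the corpus")  # 0.04% under these assumptions
```

Doubling either estimate moves the result proportionally, which is why the exact percentage matters less than the order of magnitude.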

Models are now able to scrape information from the internet, and that information is eventually added to their training data when they are fine-tuned or updated. Each new version is trained recursively on the previous version’s expanded dataset: model V.2 generates output based on model V.1’s original training data plus what was scraped from the internet, model V.3 uses model V.2’s expanded dataset plus whatever it finds online, and subsequent models continue the process. If model V.1 was originally trained on ‘reality’, adding internet data, which we might loosely call ‘mostly realistic’, taints that model’s output slightly, say from ‘realistic’ to ‘almost completely realistic’. Model V.2’s input is now ‘almost completely realistic’, but its output is only ‘mostly realistic’, and with that as input, the output of the next iteration, model V.3, is ‘somewhat realistic’.
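One way to make the recursive process concrete is to track how much of each version’s training set is still original human-written data. The sketch below is a toy bookkeeping model under our own hypothetical assumptions: version 1 is trained only on human-written text, each later version keeps the previous training set and adds scraped data equal to `growth` times its size, and a fixed `synthetic_share` of that scraped data was produced by other models. It tracks only the data mix, as a rough stand-in for "realism", and does not model how a network actually learns.

```python
def human_share_by_version(versions: int, growth: float, synthetic_share: float) -> list[float]:
    """Share of original human-written data in each model version's training set.

    Hypothetical assumptions: version 1 is trained on human-written data only;
    each later version keeps the previous training set and adds scraped data
    equal to `growth` times its size, of which `synthetic_share` was model-generated.
    """
    human, total = 1.0, 1.0  # version 1: all human-written, normalised size
    shares = [human / total]
    for _ in range(versions - 1):
        scraped = growth * total                    # new data scraped for this version
        human += scraped * (1.0 - synthetic_share)  # only part of it is human-written
        total += scraped
        shares.append(human / total)
    return shares


for version, share in enumerate(human_share_by_version(4, growth=0.5, synthetic_share=0.3), start=1):
    print(f"V.{version}: {share:.1%} human-written")
```

With these made-up rates the human-written share falls from 100.0% to 90.0%, 83.3%, and 78.9%, and approaches 70% (one minus `synthetic_share`). That floor exists only because the synthetic share is held fixed here; if model-generated content makes up a growing part of the web, the share keeps falling.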

These labels are only illustrations of the concept, but they do represent the degenerative process that can occur when models are trained on ‘polluted’ data, particularly data created by other models. The result is model collapse, which can happen relatively quickly as successive models lose information about the distribution of the original data. Google (GOOG) and other model builders have noted the risk and have tried to limit internet articles and data to more trustworthy sources, although ‘trustworthy’ is a subjective classification. Still, as the scale of LLMs continues to increase, the need for more training data will inevitably lead to the inclusion of data generated by other models, and some of that data will come without provenance.
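Model collapse can be illustrated with a much simpler model than an LLM: fit a distribution to some data, sample a fresh dataset from that fit, refit on the samples alone, and repeat. The sketch below swaps in a one-dimensional Gaussian for the model, and its settings (10 samples per generation, 200 generations, a fixed random seed) are our own illustrative assumptions, not how any production model is trained. Because each fit sees only a small sample of the previous model’s output, estimation errors compound and the fitted spread tends to shrink, so rarer values from the original distribution stop being generated.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, samples_per_gen: int = 10, seed: int = 0) -> list[float]:
    """Toy model collapse: each generation is fitted only to data sampled from the previous one.

    Illustrative assumptions: the 'model' is a 1-D Gaussian fitted by maximum
    likelihood (mean and population standard deviation), and the original,
    human-generated data follows N(0, 1).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the original distribution
    history = [sigma]
    for _ in range(generations):
        # Generate a synthetic dataset from the current model...
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # ...and fit the next model to that synthetic data alone.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)
    return history


history = simulate_collapse()
print(f"spread at generation 0:   {history[0]:.3f}")
print(f"spread at generation 200: {history[-1]:.3g}")
```

Mixing some of the original N(0, 1) data back into each generation’s sample would slow this shrinkage, which is the intuition behind favoring trusted, human-generated sources.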

The AI community may coordinate efforts to certify the data being used for training, or the data being scraped from the internet, and share that information. But at least at this point in the AI cycle, model builders cannot even agree on what data needs to be licensed and what does not, so it would seem that adding internet data will only hasten the degradation of LLM effectiveness.

How does this affect social media? It doesn’t. Social media caters to a low common denominator. Its point is not to inform and educate but to entertain and communicate, so there will always be a large community of social media users who don’t care whether what they see is accurate or even real, as long as it captures their attention for a while and perhaps generates status for whoever posted it, regardless of its accuracy. Case in point: the ‘Child under the Rubble’ photo we showed recently and the image of the Pentagon on fire that circulated months ago, both of which were deepfakes.

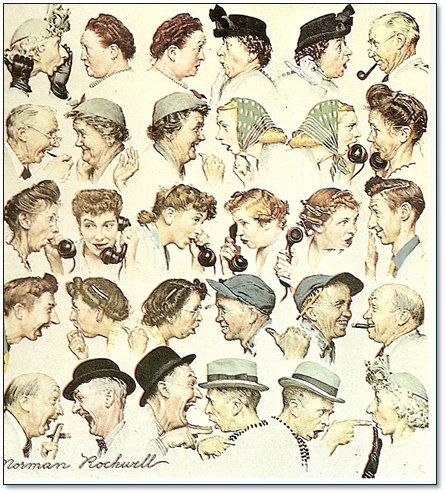

In fact, we believe deepfakes are easier to spot than inaccuracies or incorrect information in text generated by LLMs, since checking each statement an LLM outputs would require specific subject knowledge. It is a scary thought: while the industry predicts that model accuracy will keep improving until models far surpass human accuracy, models could instead slowly (or rapidly) lose sight of the hard data on which they were originally trained as that data becomes polluted with less accurate and less realistic data, much like the grade-school game of telephone. If that is the case, social media will continue, but the value of LLMs will diminish.
