The Wall

China’s mindset differs from that of other countries in that, for whatever social, political, or cultural reason, both the Chinese government and the populace take the actions of the US as a personal affront, even when those actions might be justified from a military perspective (the same is true in the US). It might seem odd that the population of China, living under a totalitarian government, would have nationalistic pride, but Chinese culture is thousands of years old, and the country, which has been run by many regimes with a variety of political views, remains fiercely protective and patriotic. In fact, it seems that the more the country is pushed by outside sources, the more it pushes back. Sort of a “You think you’re better than me? I’ll show you!”

Lisuan Technology (pvt), a 4-year-old Chinese semiconductor company founded by a team of former Silicon Valley professionals, has announced that it has successfully tested its high-performance GPU, the G100. The device differs from other Chinese-developed GPU models in that it does not use licensed GPU technology, as others do; the company designed the technology itself from the ground up. Making the device stand out further, it is thought to have been produced on a 6nm node, likely manufactured by China’s leading foundry SMIC (981.HK), with the performance target being Nvidia’s (NVDA) GeForce RTX 4060, a popular mid-range graphics card. Details are thin and the mass production commercialization of the G100 is still a year off, but the fact that China is able to get close to producing a self-designed GPU competitor is a significant step for China’s semiconductor industry, especially at 6nm.

That said, it has not been an easy path for Lisuan Technology, with the company getting close to bankruptcy last year until parent Dosin Semiconductor (pvt) bailed it out with a $27.7m capital infusion that has allowed the company to get to this point. From here, drivers have to be optimized and both hardware and software have to be verified, with a small number of units commercially available in 3Q of this year. We expect that the performance of the G100 will need to be developed further to actually compete with the RTX 4060, but even with the financial difficulties and delays that Lisuan Technology has faced, the fact that it was able to get close to producing a homegrown GPU that seems to be competitive is a major accomplishment, especially given the US restrictions. China’s “can do” attitude seems to have paid off more than expected and given China a way into this very lucrative market.

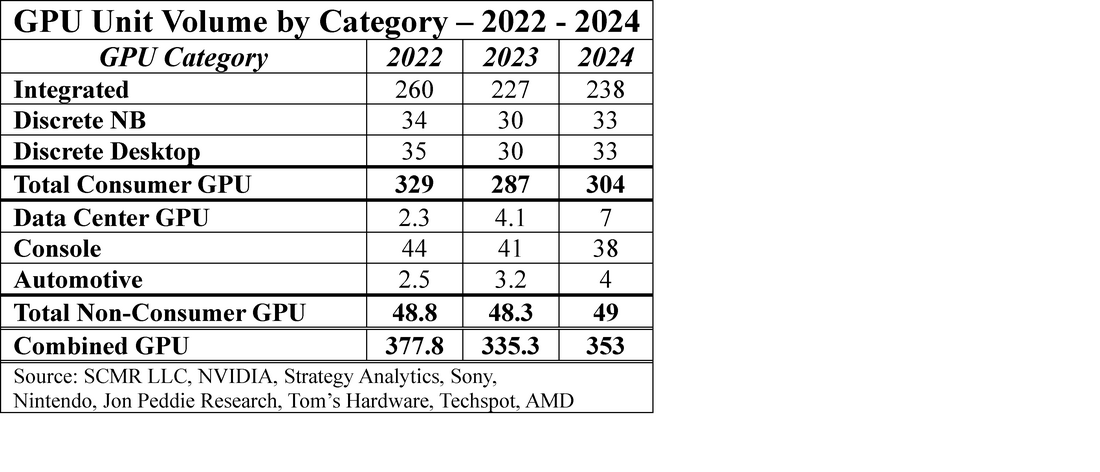

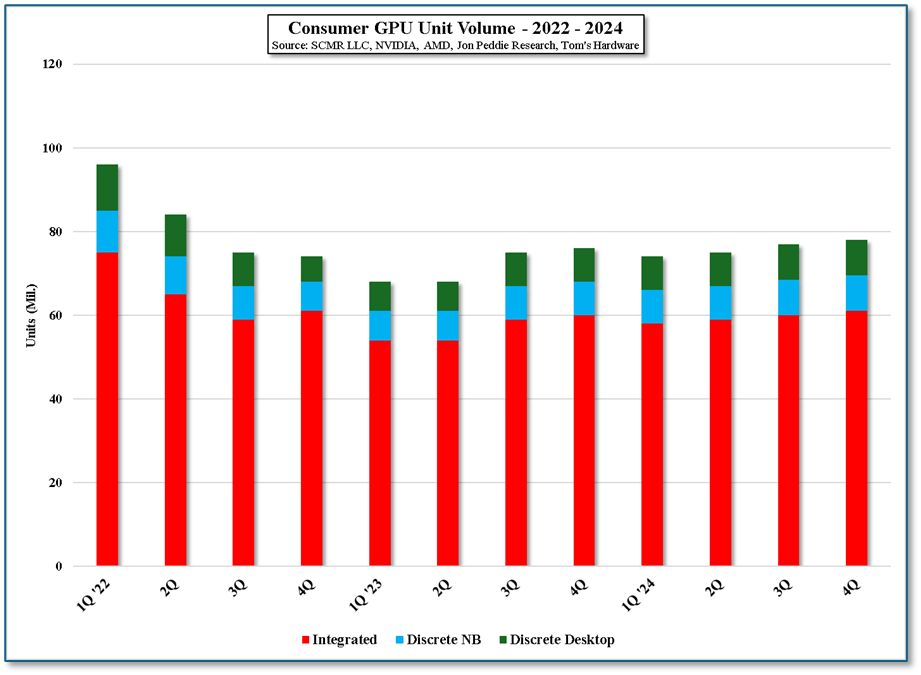

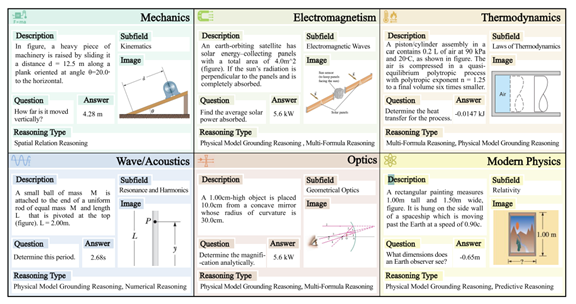

Before the AI craze, GPUs were actually used for graphics processing, converting the information sent from the CPU into data formatted for the display. This is done through a pipeline that includes shaders, which transform 3D coordinates into 2D projections using scaling, rotation, and translation, all mathematical computations. Primitives (points, lines, shapes) are assembled and rasterized into fragments, which are then given additional attributes like color or lighting, again using mathematical transformations. Given the number of pixels in a common 4K display (8,294,400), each with three (RGB) sub-pixels, and a refresh rate of 60 or 120 times per second, GPU pipelines are designed to perform a large number of calculations at a very high rate of speed.
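To give a rough sense of the arithmetic involved, here is a minimal Python sketch. It assumes a 3840 x 2160 panel (which gives the 8,294,400 pixels mentioned above), and the function names, the z-axis rotation, and the simple perspective divide are illustrative choices of ours, not a description of how any particular GPU or driver is implemented.

```python
import math

# Display arithmetic: a 4K UHD panel is assumed to be 3840 x 2160.
WIDTH, HEIGHT = 3840, 2160
pixels = WIDTH * HEIGHT      # total pixels on the panel
subpixels = pixels * 3       # one red, green, and blue sub-pixel per pixel


def subpixel_updates_per_second(refresh_hz):
    """Sub-pixel values the display needs each second at a given refresh rate."""
    return subpixels * refresh_hz


def transform_vertex(v, scale, angle_rad, translate):
    """Scale, rotate about the z-axis, then translate one 3D point."""
    x, y, z = (c * scale for c in v)
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    x, y = x * cos_a - y * sin_a, x * sin_a + y * cos_a
    tx, ty, tz = translate
    return (x + tx, y + ty, z + tz)


def project(v, focal_length=1.0):
    """Simple perspective projection of a 3D point to 2D (assumes z > 0)."""
    x, y, z = v
    return (focal_length * x / z, focal_length * y / z)


if __name__ == "__main__":
    print(f"{pixels:,} pixels, {subpixels:,} sub-pixels")
    for hz in (60, 120):
        print(f"{hz} Hz: {subpixel_updates_per_second(hz):,} sub-pixel updates/sec")
    moved = transform_vertex((1.0, 0.0, 0.0), 2.0, math.pi / 2, (0.0, 0.0, 4.0))
    print("projected 2D point:", project(moved))
```

A real GPU runs this kind of per-vertex and per-pixel math across thousands of parallel lanes, which is why the workload maps so well onto dedicated hardware.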

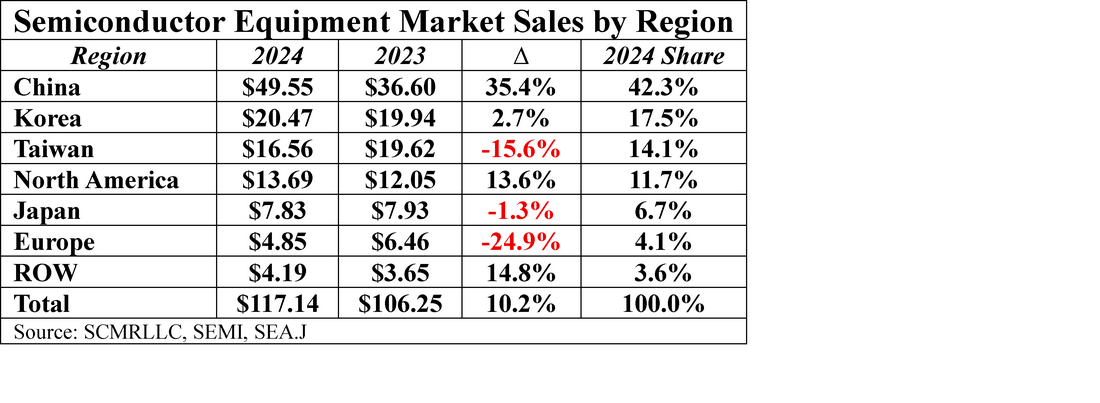

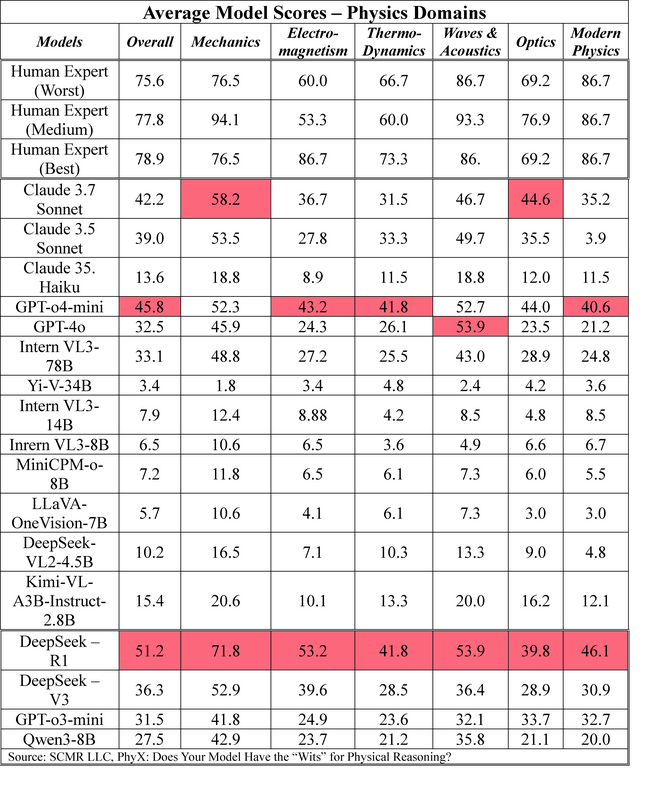

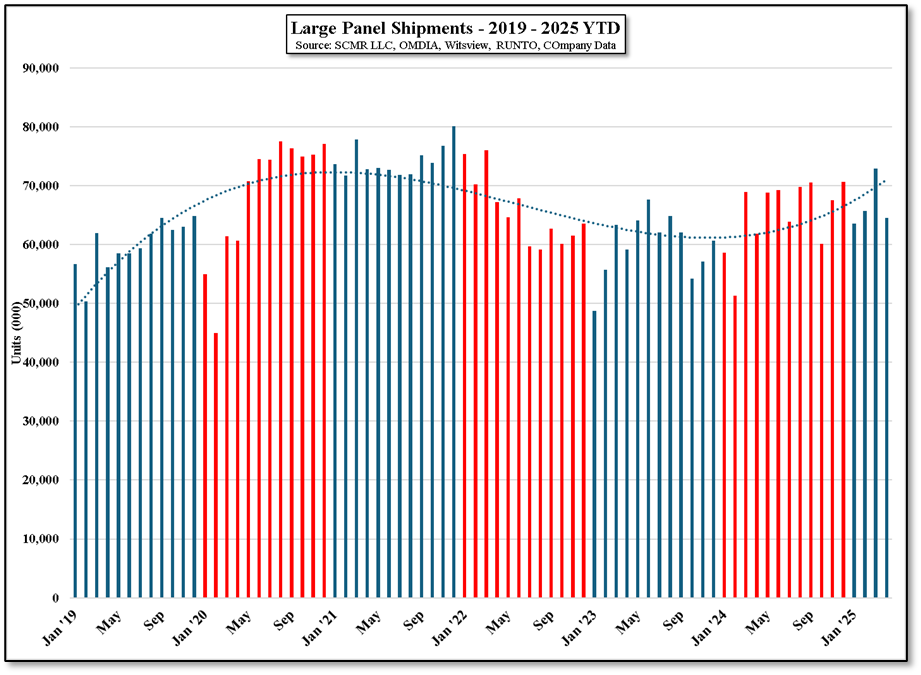

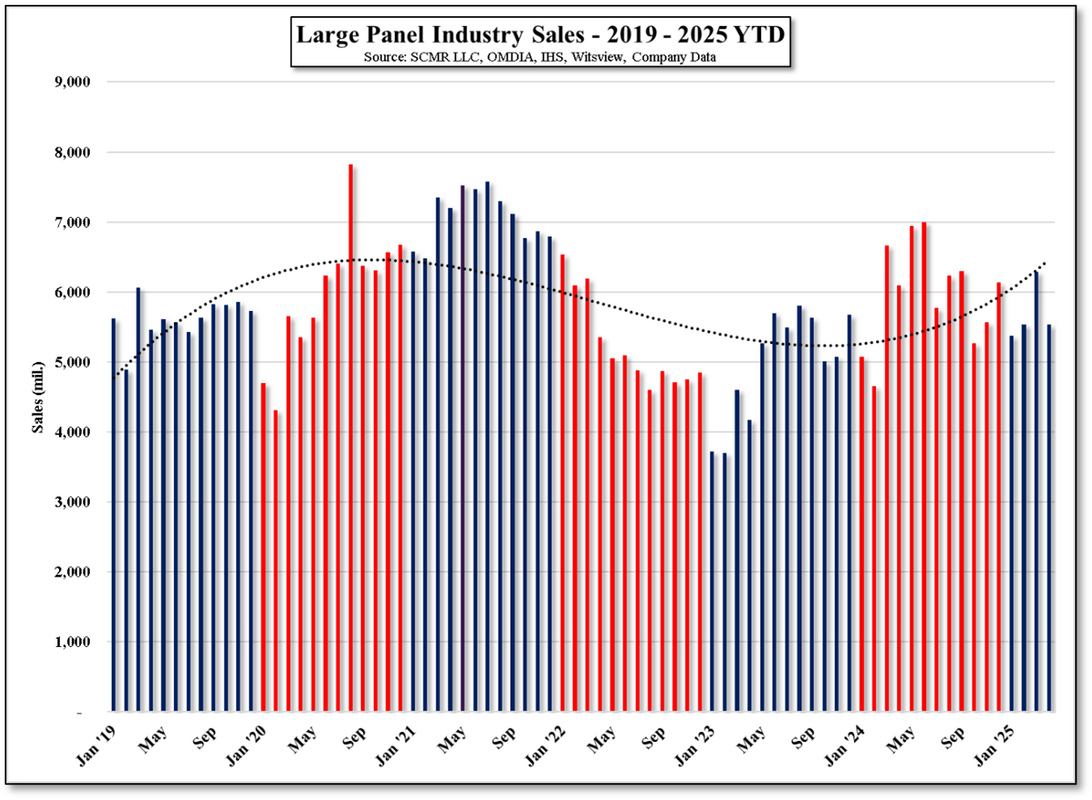

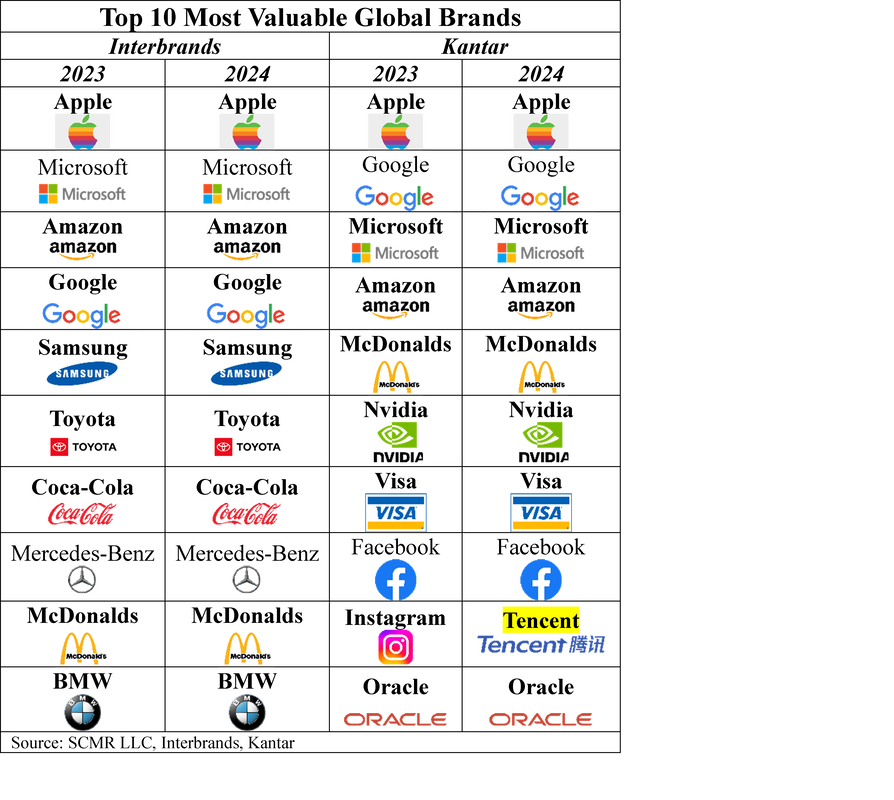

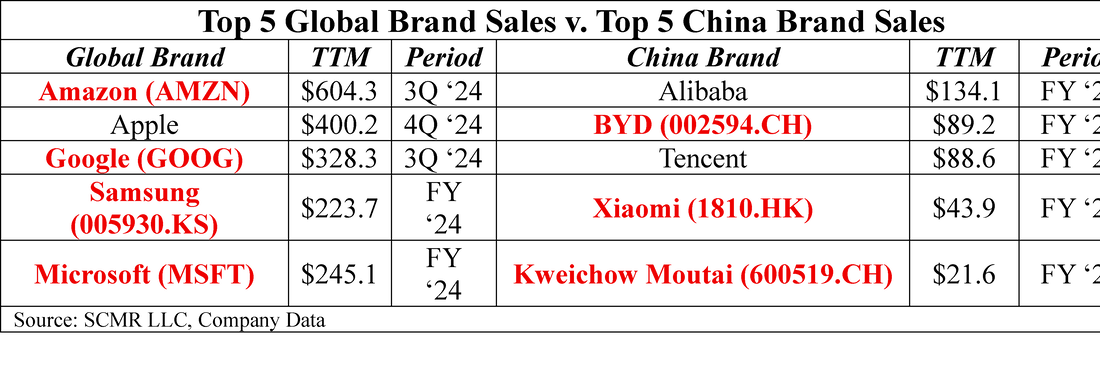

As AI systems require that same basic process of a large number of parallel calculations done at a very high rate of speed, GPUs are the basic unit behind AI model training and inference systems. Data center GPUs are obviously more sophisticated than a laptop GPU, which might be a card similar to the Nvidia model mentioned above or an integrated GPU that is part of an Intel (INTC) or AMD (AMD) CPU chipset, but they are still just very high-performance calculators, in a market that is currently dominated by Nvidia, Intel, and AMD. There is rather limited unit volume data on the overall GPU market that includes all GPU types, but from the data we collected, one can see that it is a very lucrative market on unit volumes alone. Given that the primary GPU producers are US companies, the US government has been severely limiting China’s access to high-performance GPUs, pushing companies like Lisuan Technology to develop their own GPU technology. It seems that China has found a way over the wall.
