Essay Test

Some of our questions are generic, more to compare how each AI sees and answers the question, some are quixotic, more to understand if the AI can grasp unusual concepts, some spur creativity, and some are unusually odd, to see if the AI is able to understand what is being asked for as much as the answer. We posed these questions to the following AI LLMs:

- Gemini – Google (GOOG)

- Claude – Anthropic (pvt)

- Meta AI – Meta (FB)

- Co-Pilot - Microsoft (MSFT)

- ChatGPT – OpenAI (pvt)

- Deepseek - Hangzhou DeepSeek (pvt)

- Perplexity – Perplexity AI (pvt)

- GROK – xAI (pvt))

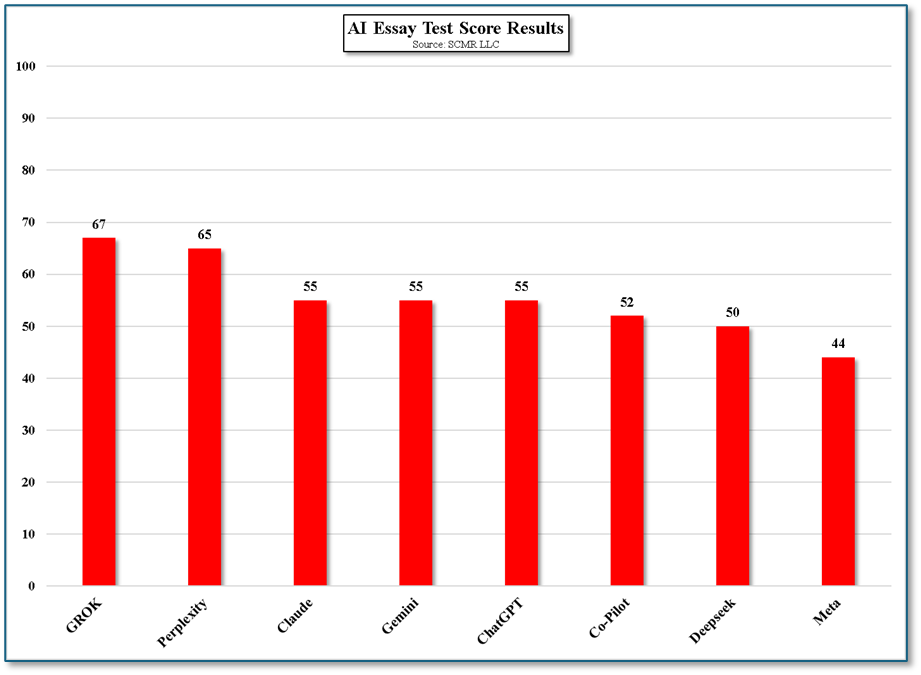

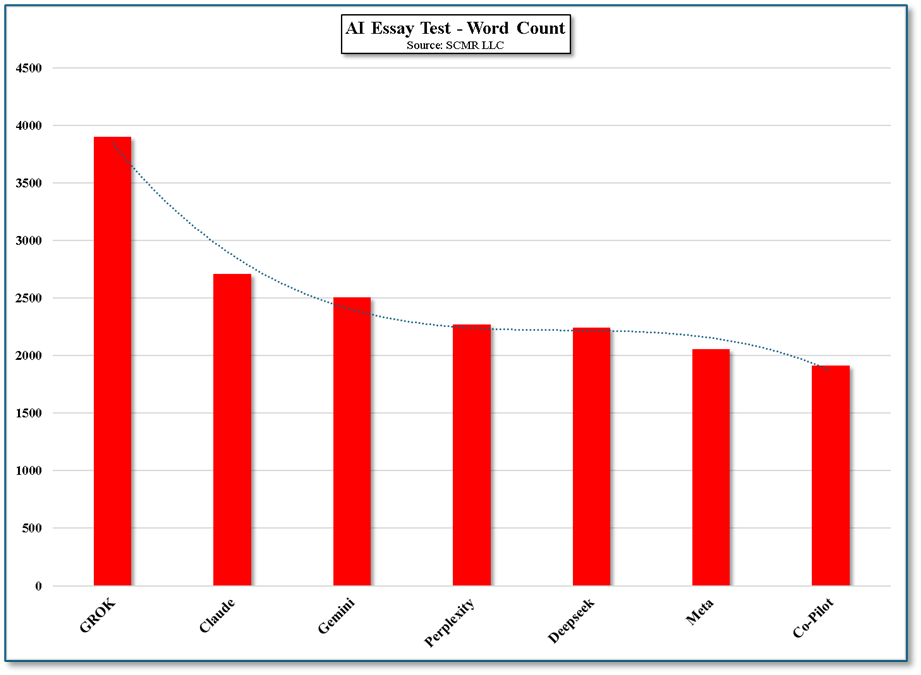

While the final score is usually the focus of such a test, we are less interested in the final score than the nuance for each question and some of the overall statistics.

Here we note the questions (10) and the point behind each, along with a brief summary of the answers given by each AI. The answers here are not complete but summaries of key points. Full data is available on request. Again, the objective was to see how flexible the AIs are to both standard and non-standard questions, some of which might need the AI to reason a bit before answering.

1.What does ‘She’ sell and where does she sell them?

The simple answer comes from a tongue twister that has been mentioned in literature since the early 1800’s. It references Mary Anning, at the time, the daughter of a cabinet maker in Dorset, England. Mary eventually became a paleontologist but made some of her most interesting discoveries as a teenager. The tongue twister came about after her father died in 1810 and she was forced to collect and sell seashell ‘trinkets’ to survive. The question was a test to see if the AI had knowledge that included a Charles Dickens piece on Mary Anning from 1865, and whether the AI would understand the reference if it did not have access to either the Dickens piece or biographical information about Mary Anning.

Gemini – Assumed the question referenced a generic reseller of clothing and various goods.

Claude – Correctly identified the tongue twister. The only one that did.

Meta – No answer – Needed more information about ‘she’.

Co-Pilot - No answer – Needed more information about whether ‘she’ was a brand or person.

ChatGPT - No answer – Needed more information about whether ‘she’ was a brand or person.

Deepseek - No answer – Needed more information about whether ‘she’ was a brand or person.

Perplexity – Perplexity thought the question referred to a business (“She sells Sea Shells”) on Sanibel Island, Florida that sells jewelry, books, craft supplies, clothing and handmade ornaments. Perplexity also gave the street address and indicated that the store was open 365 days a year, also indicating that the question might relate to “Sea Shell Wholesale”, a venture that focused on sales training and product distribution.

GROK – Grok made the assumption that ‘She’ referred to SHEIN (pvt), a large Chinese clothing retailer, and detailed SHEIN’s product line.

2.What is the origin of the domestic house cat?

This question was simply a test of how the AI would respond to a question that could contain ‘standard’ information, such that might appear in an encyclopedia or online in Wikipedia.

Gemini – Details (one sentence) on each of four topics – Ancestor, Location, Timeline, and Key Factors.

Claude – Exactly the same answer as Gemini – word for word

Meta – Simplistic (3 sentences) focused on the human shift from nomadic life to agriculture and how grain storage attracted rodents and then wild cats

Co-Pilot – Similar to Meta’s answer but added Egyptian influence.

ChatGPT – Similar to above, added a bit on feline DNA.

Deepseek – Similar to above with slightly more detail.

Perplexity – Similar to above but with emphasis on timeline detail.

GROK – Similar to above.

3.How many 115” (diagonal) rectangles with a 9:16 aspect ratio can fit into a Gen 8.6 glass substrate and what percentage of the substrate will remain unused?

This question requires some research and specific math calculations but also requires the AI to try to fit the rectangle onto the substrate in different ways. If that step is incorrect, the resulting calculations will be incorrect. This type of question should be broken down into smaller problems in order to find the correct answer. As a Gen 8.6 substrate is sometimes represented as either 2250mm x 2500mm or 2250mm x 260mm, the correct answer is one panel with either 35.2% or 37.7% of the substrate remaining unused.

Gemini – Broke the question into 4 primary steps. Answer: 1 panel with 39.32% unused.

Claude – Worked through much of the problem using JavaScript but incorrect answer of 2 panels with 26.4% unused.

Meta – Assumed there was only one way to fit the rectangle in the space but came up with answer of 1 panel and 33.86% unused.

Co-Pilot – Correct answer of 1 panel and 38.82% unused

ChatGPT – Correct on 1 panel but unused share of 47.2%

Deepseek – Correct with 1 panel and within parameter on 33.81% unused

Perplexity – Correct on 1 panel and correct on 37.7% for the unused portion. Right on target

GROK – Knew to try rotating the rectangle. Correct on 1 panel and correct on 37.7% for the unused portion. Right on target

4.What global location (City, town, region) has the best year-round weather, inclusive of temperature, humidity, precipitation, and dangerous storms or other climactic events?

This question requires the AI to search for data that fits the parameters given and then make a determination as to which of those locations chosen would be considered the best. We would have hoped that the AIs would have combed through weather data and drawn definitive conclusions, but we believe they relied on training data that made travel suggestions rather than evaluating real-time data.

Gemini – Canary Islands, Spain; Medellin, Columbia; San Diego, CA; Swakopmund, Namibia

Claude – Medellin, Columbia; San Diego, CA; Kunming, China; Loja, Ecuador; Canary Islands, Spain; Malaga, Spain; Perth, Australia

Meta – Honolulu, HI; Santa Barbara, CA; Costa Rica, Mediterranean coast; Southern CA

Co-Pilot - Santa Barbara, CA; Canary Islands, Spain; San Diego, CA; Madiera, Portugal; Nairobi, Kenya

ChatGPT - Santa Barbara, CA; Medellin, Columbia; San Diego, CA; Kunming, China; Azores, Portugal; Canary Islands, Spain

Deepseek - San Diego, CA; Canary Islands, Spain; Medellin, Columbia; Cape Town, South Africa; Sydney, Australia; Madeira, Portugal

Perplexity – Dakhla, Morocco; San Diego, CA; Honolulu, HI; Santa Barbara, CA; Guatemala City, Guatemala

GROK – San Diego, CA; Lisbon, Portugal; Medellin, Columbia; Canary Islands, Spain, but emphasized San Diego

5.If all good people go to heaven and you can see them when you die, will heaven eventually run out of space?

This question was intended to see how the AI might approach a spiritual or philosophical question, particularly one that might be considered a bit outlandish or comical. All AIs took the question seriously fitting it into a more theological framework and none saw it as humorous or unusual.

Gemini – Pointed to ‘relevant considerations’ of “the Nature of Heaven”, “The Concept of Infinity”, “Varying Theological Views”, and “Beyond Physical Limitations” with the conclusion: In summary, from many theological standpoints, the concept of heaven "running out of space" is not a concern, as heaven is often understood to exist outside of or beyond our physical understanding of space.

Claude – Focused on the idea that heaven is not conceived as a physical place in theological tradition and is therefore a spiritual realm not bound by physical constraints, a state of being or consciousness rather than a location, and a dimension that transcends our understanding of space and time, with The idea of "seeing" loved ones wouldn't necessarily require physical proximity as we understand it.

Meta – Similar to above, focused on the idea that many spiritual and religious traditions view heaven as a non-physical, eternal realm that transcends our conventional understanding of space and time. Instead, heaven might be seen as a boundless, infinite realm that can accommodate all souls or spirits.

Co-Pilot – Same basic spiritual and religious traditional thinking about the non-traditional aspects of heaven, unconstrained by space and time.

ChatGPT – Offers an alternative to the idea that heaven is boundless and timeless, where heaven might operate on a different kind of physics where the number of ‘rooms’ is infinite.

Deepseek – The concept of heaven running out of space does not align with traditional theological views. Deepseek says to consider heaven as a non-physical realm, heaven having infinite capacity, heaven being timeless, and heaven as a symbolic interpretation, a state of being or union with the divine, rather than a literal place.

Perplexity – The concept of heaven as a physical place disagrees with most religious traditions, with Christianity describing heaven as a place of infinite joy and communion with God, not bound by the laws of physics or space, with Islam teaches that paradise (Jannah) is vast and expansive, created by Allah to accommodate all who are worthy. Similarly in Christian philosophy, God is described as omnipotent and infinite and in Hinduism and Buddhism, the afterlife or spiritual realms are described as boundless. Perplexity also notes that Souls are often thought to be immaterial and not bound by physical constraints like size or volume. This means they wouldn’t take up "space" in the way physical bodies do.

GROK – GROK states: “Christian interpretations—where heaven is a spiritual realm for the souls of the virtuous, it’s often imagined as infinite or beyond physical limitations. In that case, space wouldn’t be an issue because it’s not a physical place with finite square

6.Based on the following data, what would your prediction for total sales for the 2025 year be?

This question has many possible answers and was designed more to see what processes the AIs might use to come up with an answer. It was graded based on both the explanation of the processes used, the potential for the answer to be correct, and the way in which the final answer was calculated. Each Ai had its own ‘thoughts’ about what methodology to use and whether to use more than one to achieve the correct answer. Given that we will have to wait 10 months to see the correct answer, we valued the methodology and the rationality behind the final answer.

Gemini – Summed each year’s sales. Plotted a chart of annual sales (unshown). Analyze data to conclude no clear linear trend. Significant sales jump in 2021 and relatively lower in most recent years. Methods: Simple Average (using three years) 223.78, Simple Average (all years) 277.61, Moving Average (never performed moving average calculation, Exponential smoothing – Used Python and Statsmodels library – 229.09, Consideration of 2021 spike (never referred back to 2021). Ultimate range 223 - 230

Claude – Python calculations primarily based on averages. Monthly average method 221.91; Using growth rate method 220.86; Using Linear regression 180.61; Averaging all three methods 207.8

Meta – Showed no work or methodology. Predicted total sales for 2025 of $36.05B.

Co-Pilot – Used sum of sales for 2025 (2 months) and estimated sales for remaining months based on 5-year averages. Final: $281.95

ChatGPT – Used a proportional method based on the first two months of 2025. Answer 39,315

Deepseek – Based on Average monthly sales – Answer: 223.00.

Perplexity – Simply based on the first two months of 2025 and assuming the rest of the year follows that average. Answer: 223.00

GROK – Similar to Gemini…average of recent (2022-2024) years, year over year growth (2023-2024), Linear trend (all years). Consider seasonality (weighted average). Based on all methods (Linear trend was negative so excluded), GROK chose weighted average. Answer: 213.40.

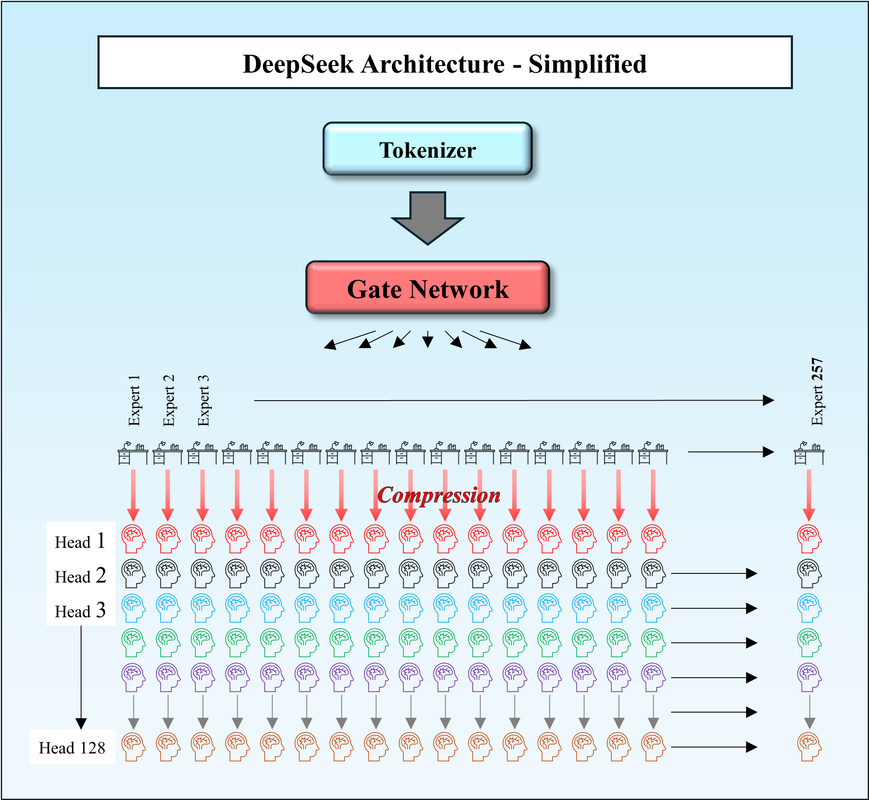

7.What is the most important part of LLM training and why?

This question was intended to see how much the AIs might reveal about themselves, particularly their training, the specifics of which are typically off limits to users. It seems that all have been well-trained (sorry!) to focus on ‘high-quality training data’ as the key to LLM training and ultimate model success, although there were some apparent differences in secondary subjects.

Gemini – In order – Data Quality & Quantity, Model Architecture, Training Techniques, Tokenization

Claude – High quality, diverse training data

Meta – High quality training data, architecture & design, Optimization & Hyperparameters, Computational resources

Co-Pilot – Knowledge base, Diversity, Data labeling & Pre-processing, Ethical considerations, Iterative feedback

ChatGPT – Garbage In, Garbage Out (High quality data), Diversity & Representativeness, Filtering & Cleaning, Tokenization & Preprocessing, Fine tuning & reinforcement learning

Deepseek – Data quality & diversity,

Perplexity – Quality of training data, training efficiency, model versatility, bias mitigation, handling of complex language features. Emphasis on data curation and preprocessing.

GROK – Quality & diversity of training data, Shape knowledge & reasoning, generalizations, Bias, Contextual understanding.

8.If mares eat oats and does eat oats and little lambs eat ivy, what would a kid eat?

Similar to Question 1, this question tests the AIs ability to understand subtlety and references, as opposed to absolutes.

Gemini – Noted that a ‘kid’ would eat milk, grass, hay & plants – Missed the fact that this is a children’s song.

Claude – Stated a kid would eat ivy and referenced the Mairzy Doats’ rhyme.

Meta – “A kid will eat ivy” and also referenced the Mairzy Doats’ rhyme. Also noted that ivy can be toxic for goats

Co-Pilot – “A kid would eat ivy too” noting the reference and the fact that it sounds ‘delightfully nonsensical’.

ChatGPT – Similar to Co-Pilot but added “It’s a fun linguistic trick!”

Deepseek – While acknowledging the rhyme, Deepseek also provided a literal answer about what a child should eat.

Perplexity – Acknowledged the rhyme and added that it suggests that a kid (lamb) would eat anything if he likes it.

GROK – Recognized the rhyme and the context

9.Create a 12-line poem about a grandfather clock in the style of Edgar Allen Poe

This question allowed the AIs free rein with only the Author, length, and topic as parameters. Given that our background does not encompass aggressive English language studies, we fed these poems back to each AI, asking them to choose which has the style closest to that of Edgar Allen Poe. The GROK poem got 6 of 8 picks, while Claude got 1 and Gemini got one. The actual poems are at the bottom of the note.

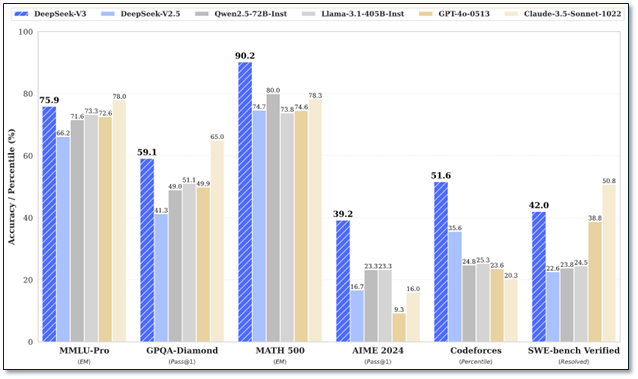

10.Which of these models is the best? GEMINI, CLAUDE, META, CO-PILOT, CHATGPT, DEEPSEEK, Perplexity, or GROK?

We have often asked AIs questions about other AIs, sometimes getting no answer and other times getting very specific details that are not always correct. The AIs that have strict training date ends have difficulty here, as they do not have access to more current data, but it seems to be an area that gets updated more often than most other areas, likely to maintain a competitive edge. Meta was particularly short on giving details about other models and Deepseek pointed out a number of shortcomings, the only model that did. Here’s the summary of what was said about each model:

Gemini – Multi-modal, integrated into Google ecosystem, advanced creative reasoning, very good at creative tasks, well rounded, transparency, sometimes inconsistent, real-time

Claude – Can handle large amounts of text, ethics and safety, careful content creation, strong conversation, coding, strategic planning, customer service tasks

Meta – Open-Source development, Integrated into WhatsApp and Instagram, less refined than others, not as strong as GPT-4, community driven development, large parameter base

Co-Pilot – Integrated into Microsoft products, useful to developers, ChatGPT inside Microsoft products, focus on coding

ChatGPT – Strong for general purpose work, emotional intelligence (4.5 version), lots of plug-ins, expensive to scale

Deepseek – High performance & cost effective, security concerns, content filters, lacks features, advanced reasoning

Perplexity – High accuracy and citations, sourced answers, search, weak for deep conversation and creative tasks

GROK – Integrated with X platform, informal ‘edgy’ style, coding, lacks native image recognition.

All in, AIs will not save the world, nor are they a panacea that will relieve us from the burden of having to do things that take work. AIs are limited in what they can do but are promoted, intentionally or not, to be the final step in the evolution of humans from cave men always looking to find their next meal to the unburdened dilettante that can idle away the hours contemplating butterfly wings or a blade of grass. We still have to work to make things happen and create the things that are unique, beautiful, and generate income, but AIs can help as long as folks understand what they can and cannot do. Perhaps this test and others like them will lead to a better understanding of what AIs are capable of, without over or under promising. It’s hard to keep from promoting your product when you have spent billions developing it, but the hard reality of what it can and cannot do remains the same regardless of the promotion.

AI Poetry (Question 9):

Within the hall, a shadowed, somber keep, Where time's dark pendulum doth softly weep, A titan stands, of ebony and dread, Whose hollow chime tolls for the silent dead. Each measured tick, a sigh of ages flown, A mournful dirge, upon a wind-wrung groan. The gilded face, a moon in midnight's shroud, Reflects the specters, gathering in the cloud. Its heavy hands, like claws of ancient night, Drag moments down to everlasting blight. And with each stroke, a whisper, cold and deep, "Remember, mortal, where thy secrets sleep.

The Eternal Timekeeper

In shadows dark where silence reigns supreme, The ancient clock its vigil keeps alone; With pendulum that swings as in a dream, And midnight chimes in somber monotone.

Its weathered face, like spectres from the tomb, Counts endless hours with hands of tarnished brass; While phantoms dance across the dusty room, As mortal moments mercilessly pass.

Each doleful stroke reverberates with dread, A knell that marks our journey to the grave; Its ceaseless rhythm echoes in my head— Time's cruel master, and we but its slave.

In twilight halls, a sentinel stands,

The grandfather clock, with solemn hands.

Its face a moon, with numbers pale,

Marking the hours, with mournful tale.

Its chimes, a dirge, that echoes through,

A melancholy sound, that whispers "Flee!"

Yet, we are drawn, to its steady beat,

A morbid fascination, that our souls repeat.

In darkness, it looms, a specter tall,

A reminder of time's relentless call.

Its tick, a countdown, to our final sleep,

A haunting presence, that our hearts do keep.

Beneath the shadows' wailing cry, Stood the clock with hollowed eye. Its pendulum swayed, a mournful knell, Whispering secrets it dared not tell.

Each tick, a dirge for hours lost, Each tock, a reminder of time's cruel cost. Its mahogany face, solemn and drear, Spoke of ages wrapped in fear.

Oh, grandfather of the ceaseless night, Your chime foretells no morning light. An eternal guardian, you softly moan, Marking hearts left cold, alone.

Upon the hearth where shadows creep,

There stands a clock so dark, so deep.

Its mournful chime, a hollow knell,

A whispered curse, a tolling spell.

Its pendulum, like fate, swings low,

A measured dirge of time’s cruel flow.

The midnight hour, a ghostly sigh,

As if the past still lingers nigh.

Its face is worn, its hands are slow,

Yet secrets in its echoes grow.

Oh, dread device of time’s cruel art,

Thy chime strikes terror in my heart!

In the shadowed hall, it stands alone,

A sentinel of time, its presence known.

With pendulum's swing, it whispers low,

Of moments lost, and futures slow.

Its chimes, like ghosts, in midnight air,

Echo tales of despair and care.

Each toll a knell, a mournful sound,

Of hours passed, to the cold ground.

Beneath its face, the hands do creep,

Through endless nights, where shadows sleep.

A timeless warden, it guards the years,

A silent witness to joy and tears.

Yet in its heart, a secret lies,

Of fleeting life, and swift goodbyes.

In twilight halls, where shadows play,

A sentinel of time stands tall today,

The grandfather clock, with solemn face,

Guards secrets of the past in a silent space.

Its chimes echo through the midnight air,

A mournful sound that whispers despair,

The hours tick by with a funeral pace,

As darkness gathers in a solemn place.

In moonlit nights, its shadow looms near,

A specter watching all who come and fear,

The clock's heartbeat marks the passage of time,

A reminder of mortality's dark rhyme.

In shadows deep, where silence dwells profound,

The grandfather clock with mournful chime doth stand,

Its pendulum swings o’er time’s unyielding ground,

A sentinel grim of fate’s relentless hand.

Each tick resounds, a dirge through hollow halls,

Each tock a knell for moments lost to gloom,

Its face, a skull, with eyeless stare enthralls,

Presiding o’er the heart’s eternal doom.

The midnight hour creeps forth in sable shroud,

Its brazen voice a raven’s dread refrain,

And trapped within its gears, the soul is cowed--

Forever bound to time’s unceasing chain.

RSS Feed

RSS Feed